Beginnings:

An Outsider Approach to Artificial Intelligence

By Jonathan Standley, 2003

Part One: A quick look at some theories and philosophies regarding artificial intelligence

The following snippet from an e-mail I sent to a member of the KurzweilAI.net MindX Forum was the first time I put into words the idea underpinning my first serious attempts to create a theoretical model of a mind, and then to design a buildable system based on that model.

Everything ("instructions" and "data") is expressed in a symbolic language. This language is based on logic and mathematics, and is highly reductive. The language is "Godel Minimal" (my terminology) in that it is a logically consistent system that contains a bare minimum of external references. All concepts are reduced to constructions of the external references. These unprovable references (I guess in math you would call them postulates) are used to construct a concept of "I". "I" is then treated as an external reference and is used as the root concept of ALL concepts.... see where this is going :) ? I don't have enough of a background in math to be sure that my symbolic language is fully reductive, but I think the idea is solid. Everything in the AI is in this symbolic language (SL). Sensory input is reduced to SL expressions. Memories, ideas, beliefs, facts, are all SL constructs; the "data" and "instructions" are differentiated solely by context... (11/29/02)

I went along this path for a while, building more and more complex models around this highly formalized concept. This led to some interesting ideas and creations, one of which was a symbolic language designed to be built up from “Godel Minimal” logical elements. I called it Internal Symbolic Language (ISL); a rough, incomplete outline of it is linked here, in the AI section of my site.
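To make the idea a little more concrete, here is a minimal Python sketch of a reductive concept store in the spirit of the e-mail excerpt above. It is a hypothetical illustration built on my own assumptions, not the Internal Symbolic Language itself: the three primitive names, the `Concept` class, the `ACTIONS` table, and the functions `reduce_to_primitives`, `rooted_in_self`, and `interpret` are all invented for this example. It shows three properties from the excerpt: every concept reduces to a small set of primitive references, "I" is built from those primitives and then serves as a root for other concepts, and the same construct is treated as data or as an instruction depending only on context.

```python
from dataclasses import dataclass
from typing import Union

# Hypothetical primitive "external references" (postulates); names are illustrative only.
PRIMITIVES = frozenset({"exists", "distinct", "relates"})


@dataclass(frozen=True)
class Concept:
    """A concept is a named construction over primitives and/or other concepts."""
    name: str
    parts: tuple  # each part is a primitive name (str) or another Concept


def reduce_to_primitives(c: Union[str, Concept]) -> set:
    """Recursively reduce a concept to the set of primitives it is built from."""
    if isinstance(c, str):
        if c not in PRIMITIVES:
            raise ValueError(f"unknown primitive: {c}")
        return {c}
    result = set()
    for part in c.parts:
        result |= reduce_to_primitives(part)
    return result


# "I" is constructed from primitives, then used as the root of other concepts.
SELF = Concept("I", ("exists", "distinct"))
SEEING = Concept("seeing", (SELF, "relates"))


def rooted_in_self(c: Union[str, Concept]) -> bool:
    """True if the construction of c passes through the root concept "I"."""
    if isinstance(c, str):
        return False
    return c == SELF or any(rooted_in_self(p) for p in c.parts)


# Hypothetical behaviour for constructs read as instructions.
ACTIONS = {"seeing": lambda: "attend to visual input"}


def interpret(c: Concept, context: str):
    """The same construct is data or instruction depending only on context."""
    if context == "data":
        return c  # stored or inspected as-is
    if context == "instruction":
        return ACTIONS[c.name]()  # executed
    raise ValueError(f"unknown context: {context}")
```

For example, `reduce_to_primitives(SEEING)` yields all three primitives, while `interpret(SEEING, "data")` returns the construct itself and `interpret(SEEING, "instruction")` returns `"attend to visual input"`. A real system would need far richer construction rules; the point here is only that one representation can serve both roles.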

At the same time, I was thinking about ethics, and about how to build an ethical mind. This was the spark for the design philosophy that today (02/08/03) is one of the core components of my current AGI system architecture:

A healthy mind needs to be ... (11/29/02)

BTW, both of the above e-mail excerpts are from messages to the same person; until (if) I have his permission, I’m not going to post his address or alias here. Both messages were also sent on the same day. A lot of very cool (IMO :) stuff came about in the couple of weeks following those two e-mails. Much of it was hardware architectures designed to support the gargantuan processing requirements of the ISL-based AI architecture I was working on at the time. These architectures are quite different from what is out there today. I’m going to discuss them here, but a little further on. The following excerpt is from the [agi] mailing list, owned and administered by Ben Goertzel. The red text is a section of someone else’s post, and the green portion (mine) is my response to it.

"The idea of putting a baby AI in a simulated world where it might learn ..." (12/12/02)

A little notebook sketch I did a couple of years ago. I think it nicely illustrates the idea that feedback is critical to self-awareness.

I included the above to show the direction my thoughts on subjective experience, in relation to behavior, were taking. There are numerous factual errors in the above post, but its core idea, that subjective experience arises from changes and states in neural activity patterns, is in my estimation nonetheless correct. This is extremely important, because it separates the nature of subjective experience from the nature of its physical cause, i.e. chemical signaling via neurotransmitters. It implies that any such complex, massively interconnected dynamic system can give rise to subjective experience regardless of its physical substrate. The two messages below, posted to the SL4.org mailing list, are recent and show how my thoughts on this matter have evolved. As usual, red text is by others and green is by me.

I posted this on the wta-arts Yahoo group today; I was wondering what your ... (02/06/03)

You wrote:


The last three e-mails all deal extensively with a topic that many choose to skirt around or avoid entirely: qualia (Wikipedia). Admittedly, qualia are a very tricky and slippery concept. Indeed, the classic example of the problem of qualia, “how does one describe color to a man who has been blind since birth?”, illustrates the issue quite succinctly. IMO, however, the problem must ultimately be addressed in some fashion, whether by AI researchers, neuroscientists, or psychologists; who does it doesn’t truly matter.

I’ve decided to tackle the problem head-on, as have some others recently. One well-known thinker, Sir Roger Penrose, has made a well-argued case that qualia are quantum in nature. He asserts that all conscious experience arises from quantum processes occurring in the microtubules of the brain’s neurons; microtubules are a component of the internal cytoskeletal structure of mammalian cells. A good discussion of the shortcomings of this hypothesis is here, at SL4’s wiki. While I am more than willing to entertain Penrose’s stance, I do not agree with him. Accordingly, I have tried to develop a model of consciousness that does not rely upon quantum phenomena (not to say that quantum effects cannot be part of consciousness, only that they are not critical to it). Some of the work I produced while exploring my ideas about the nature of consciousness can be seen here, in the AI section of my website. The three images named neuralnet 1-3 (.jpeg format) are why I pointed you to the page; the first image outlines an older AGI architecture that I am no longer actively developing.

When I first began working seriously on a model of intelligence in early December 2002, natural language parsing was on my mind quite a bit. This tied in with ISL, the symbolic language I was developing. Gradually, I began to question whether language really plays a crucial role in intelligence. This brief exchange on the [agi] list illustrates where I was headed during the 2002 holidays:


I included the above to give you a glimpse of another aspect of AI that I was exploring at the time. I will return to the theoretical and philosophical side of artificial intelligence in a while, but for now I would like to switch to a more pragmatic point of view and discuss some issues involved in implementing an application as demanding as AI.

Part Two: Computational architectures reconsidered

“Before the GRAPE computers were developed, astrophysicists in need of computers that could execute highly complex computations had been disappointed to find that computer speed lagged far behind expectations…. The lag results in part because computer chips are generalized and required to perform multiple tasks that may not be related to the same goal….. group of astrophysicists at the University of Tokyo took matters into their own hands over ten years ago and created a chip with one specific purpose: to keep track of the gravitational interactions….One of the computers, the GRAPE-4, was the world's fastest computer from 1995 to 1997”

-From an abstract which appeared in Science magazine http://xxx.lanl.gov/abs/astro-ph/9811418

The article describes the accomplishments of the GRAPE project, a joint effort between several prestigious Japanese universities.
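The computation GRAPE puts in silicon is, as I understand it, the direct summation of pairwise gravitational forces in an N-body simulation, with the host computer handling everything else. The Python sketch below shows that kernel in software, purely to illustrate why a fixed-function chip pays off: the same handful of arithmetic operations is repeated N² times per step. It is not GRAPE's actual pipeline; the function name `accelerations`, the choice of units (G = 1), and the softening length `eps` are my own assumptions.

```python
import math


def accelerations(positions, masses, eps=1e-3, G=1.0):
    """Direct-summation gravitational accelerations, O(N^2) pair interactions.

    positions: list of (x, y, z) tuples; masses: list of floats.
    eps is an assumed softening length that avoids division by zero
    when two particles coincide.
    """
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        xi, yi, zi = positions[i]
        for j in range(n):
            if i == j:
                continue
            dx = positions[j][0] - xi
            dy = positions[j][1] - yi
            dz = positions[j][2] - zi
            r2 = dx * dx + dy * dy + dz * dz + eps * eps
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            # Pull on particle i toward particle j: G * m_j * d / |d|^3
            s = G * masses[j] * inv_r3
            acc[i][0] += s * dx
            acc[i][1] += s * dy
            acc[i][2] += s * dz
    return acc
```

Doubling the number of particles roughly quadruples the work in the inner loop, which is the kind of narrow, repetitive workload where dedicated hardware can outpace a general-purpose CPU.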

The above quote sums up a situation that is prevalent in almost all areas of computer hardware design: the overemphasis on powerful general-purpose CPUs as the foundation of most commercially available architectures. The underlining in the above excerpt is mine; I added it to highlight the key point I wish to address. In no way do I discount the importance or necessity of general-purpose computation; rather, I question whether it is, in essence, ‘overused’.

Some dedicated-purpose hardware has become quite common even in consumer-level personal computers. Graphics accelerators and sound cards are the most notable examples, though many of the chips on the system board are in fact dedicated hardware, controlling things such as hard drives and memory access. Though important, these tasks do not require the massive resources that the sound and graphics of modern computer games do. If you have a copy of Quake or Quake 2, get it out and play it first in software rendering mode, then switch over to hardware rendering. Quake 1 is an especially good example, as it came to market at more or less the same time as the first affordably priced 3D accelerators. What a world of difference it made when I installed a 4MB S3 ViRGE accelerator in my P166MMX back in 1996…

MalleableWare – My first try at designing AI-dedicated hardware

In the context of games, there has lately been serious speculation about the merits of dedicated hardware to handle game physics and other CPU-cycle-hungry processes that are increasingly common in modern PC games. But why not take this approach to its logical conclusion? That is where the idea for MalleableWare (MW) came from. As I idly played around with the idea of an all-dedicated-hardware PC, the recurring problem was the lack of flexibility that is the trade-off for relying on hardwired logic. What about FPGAs? They are expensive, to be sure, but if you placed a small FPGA unit on-die with hard logic and interfaced the two, economies of scale would dramatically decrease costs.

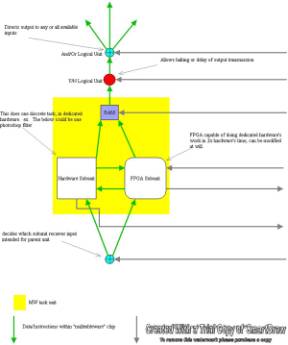

The general design of a MalleableWare chip’s fundamental building block

The illustration above shows the basic design of a MalleableWare task unit. A single MW chip could contain anywhere from several to several hundred of these units, linked together however the designers see fit. The original, albeit incomplete, MW document is here. The FPGA subunits on an MW chip are not intended to do much heavy lifting, so to speak; rather, they are intended to allow incremental patches and upgrades. Tangential as it is to the topic of AI, the implications of widespread industry adoption of MW architecture and design are mind-blowing. These implications will be the focus of a forthcoming paper, but just because I feel like it, I’m going to list a few points I consider important.
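As a rough software analogy for the task unit described above, under my own assumptions: a fixed function stands in for the hard logic that does the heavy lifting, and a small, replaceable function stands in for the FPGA subunit, which sees the input and the hard logic's raw output and can correct it. The `TaskUnit` class, its `load_patch` method, and the `saturating_double` example are hypothetical; this models the patching idea only, not any real hardware interface.

```python
from typing import Callable, Optional


class TaskUnit:
    """Software analogy of a MalleableWare task unit (hypothetical).

    hard_logic: a fixed function standing in for hardwired logic that
    cannot be changed after fabrication.
    patch: an optional small function standing in for the on-die FPGA
    subunit; it receives the input and the hard logic's raw output.
    """

    def __init__(self, hard_logic: Callable[[int], int]):
        self._hard_logic = hard_logic
        self._patch: Optional[Callable[[int, int], int]] = None

    def load_patch(self, patch: Callable[[int, int], int]) -> None:
        """'Reconfigure the FPGA': install a correction for the hard logic."""
        self._patch = patch

    def run(self, x: int) -> int:
        raw = self._hard_logic(x)  # the hard logic always does the main work
        if self._patch is None:
            return raw
        return self._patch(x, raw)


def saturating_double(x: int) -> int:
    # Hypothetical hard logic shipped with a bug: it should saturate
    # at 1023 but saturates at 255.
    return min(x * 2, 255)


unit = TaskUnit(saturating_double)
# The patch intervenes only in the saturated case; otherwise it passes
# the hard logic's result through unchanged.
unit.load_patch(lambda x, raw: min(x * 2, 1023) if raw == 255 else raw)
```

Here the "shipped" hard logic saturates too early, and the patch corrects only the saturated case, so `unit.run(200)` returns 400 instead of 255. The design choice mirrors the text: the patch path stays small and only intervenes where the fixed logic is wrong.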

MW and the software industry

If the software industry became the MalleableWare industry:

1) 95% of software piracy would cease to exist, without any DRM, copy-protection, or other such nonsense.
2) An average application would see a performance jump of at least a factor of 10.
3) End-user prices would drop significantly, since piracy would no longer be an issue.
4) The PC architecture necessitated by an industry-wide migration to MW architectures would be far more robust than current design paradigms.

Artificial Life, Chaos and Complexity Theory, and the like have interested me for a long time. When I was in middle school, I was given “Chaos: Making a New Science” by James Gleick. It was a revelation to see the hidden patterns lurking behind so much of everyday experience.